Anton Pugh, an M.A. student in Electrical Engineering and member of the MiND Ensemble, has found a unique way to transform his thoughts into reality with the Emotiv EPOC. He’s shared his creative process and source files with the community in hopes that others will build off his ideas. Design Lab 1 now has two fully functional EPOC units available upon request.

Concept

I wanted to use EEG (electroencephalogram) signals to produce something tangible. I decided to take a familiar object (a chair) and manipulate it with brain data. I thought the brain’s reaction to music would be an interesting source for that data.

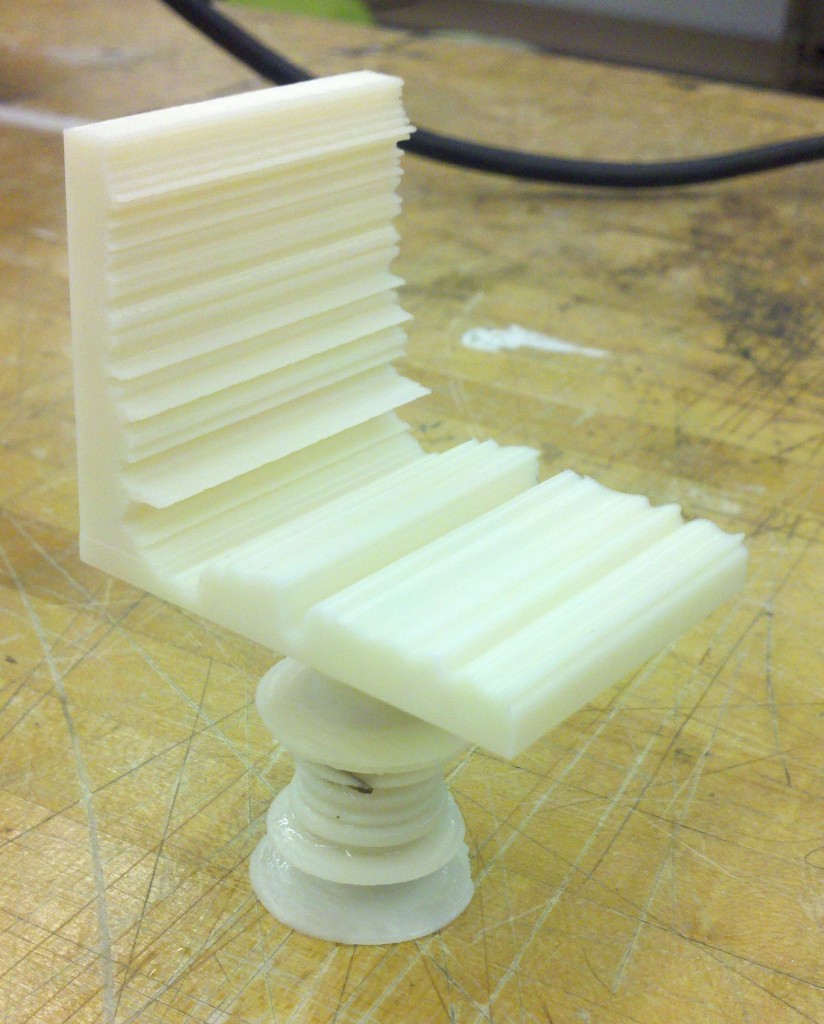

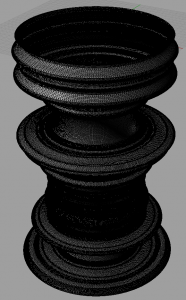

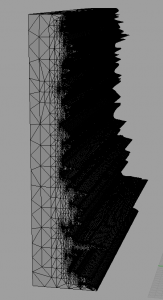

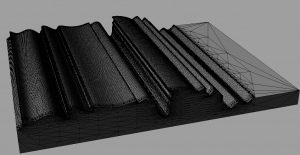

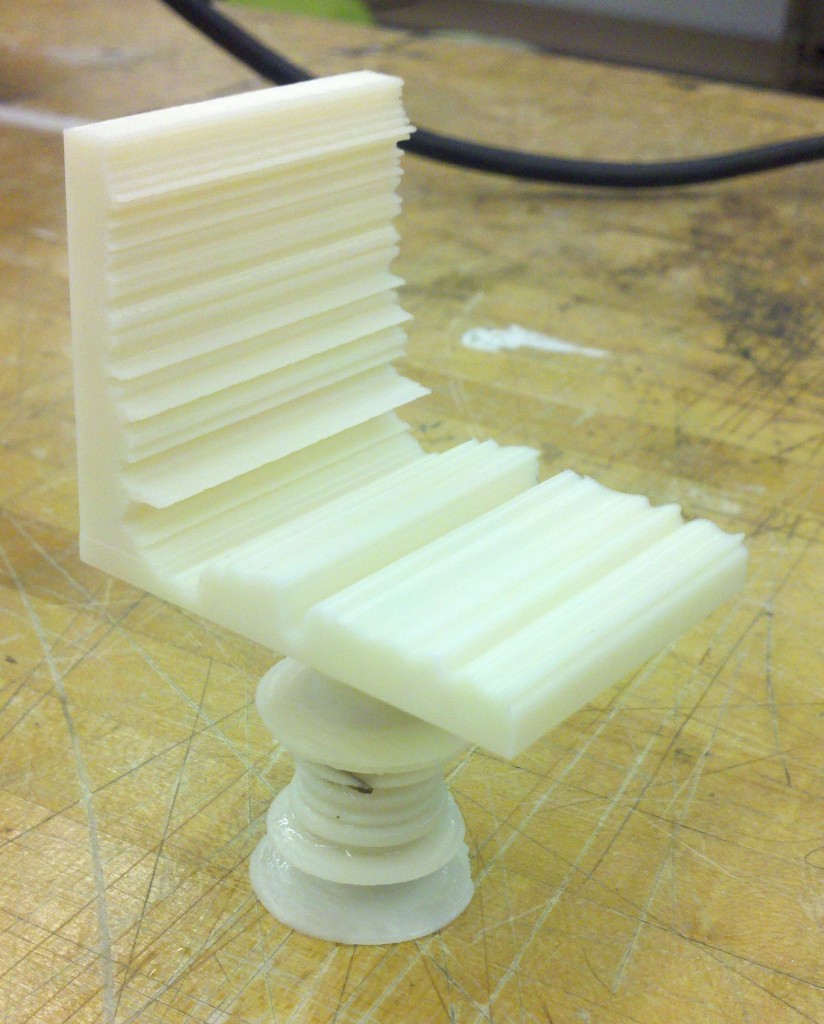

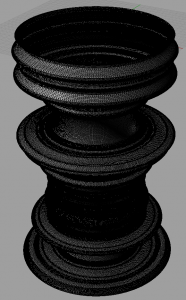

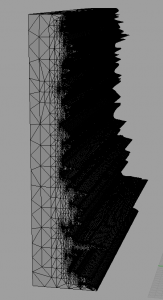

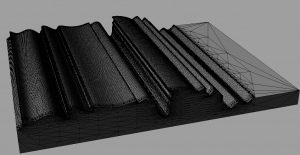

I used a very basic chair with one leg, a seat and a back. The way we interact with chairs is often taken for granted; however, the three components of this chair capture three very important aspects of sitting. First, there is the seat, which provides us with support in the many positions we choose to sit in. I used the meditation parameter of my brain waves to shape the seat because, underneath everything happening in our brains, meditation is always supporting our thoughts. Second, there is the back, which we interact with differently depending on our mood and attention. I used the excitement parameter of my brain waves to shape the back because our interaction with the back of a chair is usually the most dynamic. Finally, there is the leg, which connects the chair to the ground. I used the engagement/boredom parameter of my brain waves to shape the leg because, in a sense, our engagement/boredom determines how present we are in reality.

Technical Description

Several tools are readily available for interfacing the Emotiv EPOC with other hardware and software. In particular, Mind Your OSC’s translates the brain data to OSC (Open Sound Control) data, which can be interfaced with a variety of musical hardware, as well as with Processing.

To use Mind Your OSC’s, I needed to download the Emotiv EPOC SDK, because Mind Your OSC’s gets its data from the Emotiv Control Panel included in the SDK. To get the data into Processing, I used the oscP5 library, starting from an example project by Joshua Madara and modifying it.
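For readers curious about what travels between the two programs, here is a minimal Python sketch of decoding a single OSC message by hand. It is not the code used in this project (oscP5 does this decoding inside Processing). It only follows the OSC 1.0 layout: a null-padded address string, a null-padded type tag string starting with a comma, then big-endian 32-bit arguments. The address `/AFF/Meditation` and the helper `encode_float_message` are placeholders for illustration; check the Mind Your OSC’s documentation for the addresses it actually sends.

```python
import struct


def _read_padded_string(data, offset):
    """Read a null-terminated OSC string; return it and the next 4-byte-aligned offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # OSC strings are followed by 1-4 null bytes so their total length is a multiple of 4.
    next_offset = (end + 4) & ~3
    return text, next_offset


def decode_osc_message(packet):
    """Decode one OSC message (not a bundle) into (address, list of arguments)."""
    address, offset = _read_padded_string(packet, 0)
    type_tags, offset = _read_padded_string(packet, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in type_tags[1:]:
        if tag == "f":  # 32-bit big-endian float
            (value,) = struct.unpack_from(">f", packet, offset)
            offset += 4
        elif tag == "i":  # 32-bit big-endian signed integer
            (value,) = struct.unpack_from(">i", packet, offset)
            offset += 4
        elif tag == "s":  # null-padded string
            value, offset = _read_padded_string(packet, offset)
        else:
            raise ValueError("unsupported type tag: " + tag)
        args.append(value)
    return address, args


def encode_float_message(address, value):
    """Build a one-float OSC message (hypothetical helper, used here to make test data)."""
    def pad(text):
        raw = text.encode("ascii") + b"\x00"
        return raw + b"\x00" * (-len(raw) % 4)
    return pad(address) + pad(",f") + struct.pack(">f", value)


# "/AFF/Meditation" is an assumed address, not confirmed by this post.
packet = encode_float_message("/AFF/Meditation", 0.5)
address, args = decode_osc_message(packet)
print(address, args)
```

In a real setup the packet would arrive over UDP rather than being built locally, and each decoded value would be appended to a list along with a timestamp.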

I collected data while listening to “Did You See The Words?” by Animal Collective, and once the data had been collected, I used 3D modeling software called Rhino to build the chair. The 3D printers in the UM3D lab require STL files as input, and STL is one of the basic export options in Rhino.
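As background on the STL format itself: an STL file is just a list of triangles, each with a facet normal. The Python sketch below writes the ASCII variant of the format for a list of triangles. It is an illustration only, not part of this project's pipeline (Rhino produced the actual files), and the function names are made up for the example.

```python
import math


def facet_normal(a, b, c):
    """Unit normal of triangle (a, b, c), following the right-hand rule."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    # Cross product of the two edge vectors.
    nx = uy * vz - uz * vy
    ny = uz * vx - ux * vz
    nz = ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        return (0.0, 0.0, 0.0)  # degenerate triangle
    return (nx / length, ny / length, nz / length)


def to_ascii_stl(name, triangles):
    """Return the ASCII STL text for a list of (a, b, c) vertex triples."""
    lines = ["solid " + name]
    for a, b, c in triangles:
        n = facet_normal(a, b, c)
        lines.append("  facet normal {:.6e} {:.6e} {:.6e}".format(*n))
        lines.append("    outer loop")
        for v in (a, b, c):
            lines.append("      vertex {:.6e} {:.6e} {:.6e}".format(*v))
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append("endsolid " + name)
    return "\n".join(lines) + "\n"


# A single triangle lying in the XY plane.
triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(to_ascii_stl("demo", [triangle]))
```

Because every triangle is stored explicitly, finely detailed surfaces produce large files.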

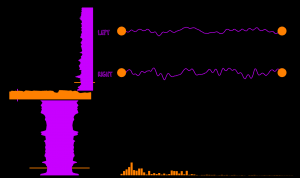

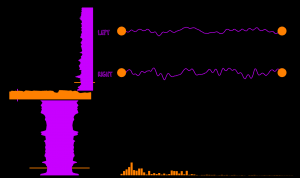

Finally, I created a Processing sketch which plays the song I listened to and shows the point on each piece of the chair at which my brain data is shaping it. For reference, I also used the Minim library for audio analysis and synthesis to display the frequency spectrum and the samples of the song that are playing at any given time.
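The core of such a visual aid is mapping the current playback position to the matching recorded brain-data sample. The Python sketch below shows one way to do that. It assumes the samples were recorded at a uniform rate over the whole song, which this post does not confirm, and the function name is hypothetical. In Processing, the position and length would come from Minim's `AudioPlayer` (`position()` and `length()`, both in milliseconds).

```python
def sample_index_at(position_ms, song_length_ms, sample_count):
    """Index of the recorded sample that corresponds to the playback position.

    Assumes samples are evenly spaced across the song (an assumption of this sketch).
    """
    if song_length_ms <= 0 or sample_count <= 0:
        raise ValueError("song length and sample count must be positive")
    # Clamp the fraction so positions before the start or past the end stay in range.
    fraction = min(max(position_ms / song_length_ms, 0.0), 1.0)
    return min(int(fraction * sample_count), sample_count - 1)


# Halfway through a 1000 ms clip with 10 samples.
print(sample_index_at(500, 1000, 10))
```

The returned index can then be used to look up the meditation, excitement, and engagement/boredom values and highlight the matching point on the seat, back, and leg.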

Result

Overall, I am very pleased with the results. The sculpture alone does not evoke many emotions and really just looks like an uncomfortable chair. However, with the Processing sketch as a visual aid, much more light is shed on what happened while I was listening to the song. If this were to be an installation, I would probably embed the visual aid in a web page, so that people could view the aid on their smartphones while viewing the piece.

Files

awpugh_project_2

awpugh_rhino_files

The STL files are approximately 87 MB, which exceeds my upload allotment, but I will provide them upon request.